Top Data→AI News

📞 What If Your Fairness Algorithm Only Works on One Dataset? Meet FairGround, the Infrastructure That Makes Fair ML Generalizable

src:fairground

I recently reviewed the FAIRGAME framework, which uses game theory to expose biases that only emerge when AI agents strategically interact with each other (read it here). That groundbreaking research showed us a completely new dimension of AI bias: one that appears during multi-agent interactions, not just in training data.

In my journey reviewing fairness and bias, I discovered that fairness research has exploded with innovative approaches: game theory, causal inference, adversarial testing. Yet each study uses different datasets, processed differently and evaluated differently. It's not that the research is flawed; it's that we're all working in parallel universes that can't talk to each other.

Before the breakthrough of ImageNet, computer vision researchers all used different image datasets with different preprocessing. Progress was slow and chaotic. ImageNet standardized everything, and suddenly we got deep learning breakthroughs that changed the world.

Now imagine the same scenario in fairness research: You're trying to build a fairer AI system for loan approvals. You read 10 cutting-edge papers on bias mitigation. Each uses different datasets, processed differently, split differently, with different fairness definitions. When you try to reproduce their results or compare which method actually works best, you can't. The foundation is quicksand.

This creates two fundamental problems:

The Credibility Crisis: We can't tell which fairness methods actually work because every study prepares its data differently. One researcher's "fair" model might be another's biased disaster, but we'd never know.

The Innovation Bottleneck: Researchers waste months preprocessing datasets instead of advancing fairness techniques like FAIRGAME. Every team reinvents the wheel, introducing inconsistencies that contaminate results.

But I have good news: Jan Simson, Alessandro Fabris, Cosima Fröhner, Frauke Kreuter, and Christoph Kern, a team from the University of Mannheim, University of Padova, and Ludwig Maximilian University of Munich, just released a new framework. It's called FairGround (Framework for Robust and Reproducible Research on Algorithmic Fairness), and having read the paper, I think it could standardize fairness research the way ImageNet standardized computer vision.

Key Highlights:

🗂️ 44 Standardized Datasets, One Unified Framework: FairGround provides a curated corpus of tabular datasets spanning healthcare, criminal justice, finance, education, and employment—all with rich fairness-relevant metadata. No more hunting for data or wondering if your dataset is representative.

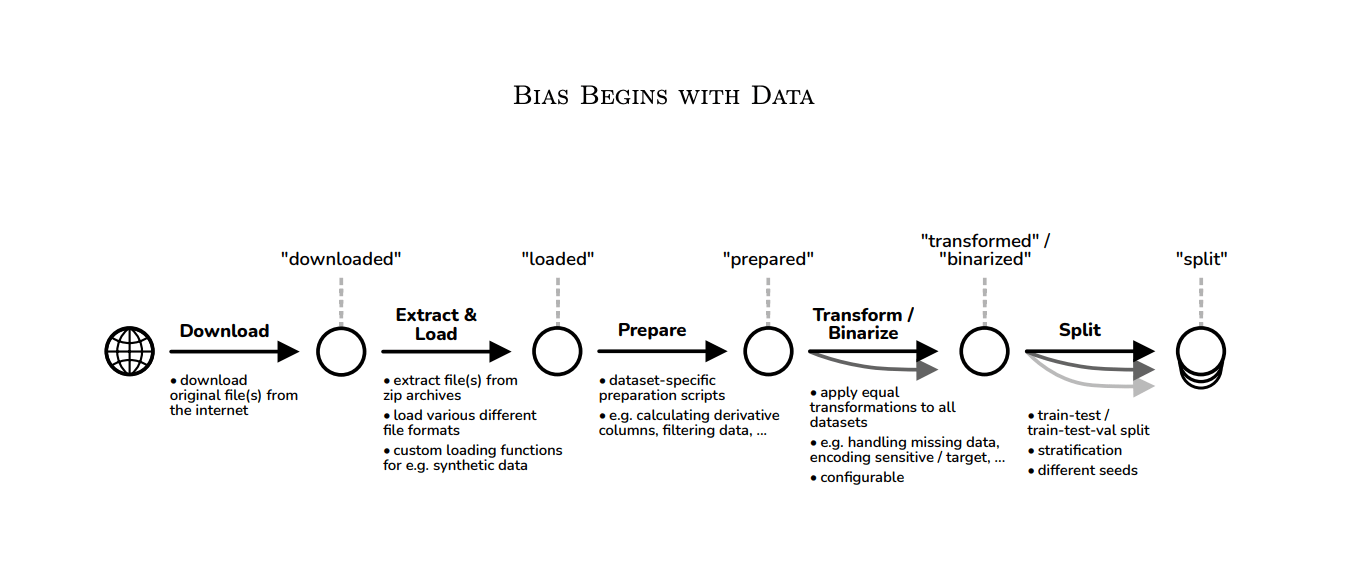

🐍 Python Package That Actually Works: Standardized dataset loading, preprocessing, transformation, and splitting. One line of code replaces weeks of data wrangling. Compatible with major ML libraries. Open source and free forever.

📊 Reproducibility Built Right In: Every dataset comes with documented preprocessing steps, fairness-sensitive attributes clearly labeled, and consistent train-test splits. Finally, researchers can compare apples to apples instead of apples to mysteriously-processed oranges.

🔄 End the Arbitrary Dataset Selection: The paper exposes how most fairness research relies on the same 5-10 datasets (Adult, COMPAS, German Credit) chosen arbitrarily decades ago. FairGround provides 44 diverse datasets, ensuring your fairness technique works beyond the usual suspects.

🎯 Metadata That Matters: Each dataset includes information on sensitive attributes, fairness concerns, domain context, data collection methods, and known limitations. You know exactly what you're working with and what ethical considerations apply.
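To make the "consistent train-test splits" point concrete, here is a minimal sketch of what a reproducible split can look like. This is not FairGround's API: the `stratified_split` function, its parameters, and the toy records are all hypothetical, written with the Python standard library only. It assumes each record carries a labeled sensitive attribute and shows a seeded split that keeps each group's share the same in train and test.

```python
import random
from collections import defaultdict

def stratified_split(rows, group_key, test_fraction=0.2, seed=42):
    """Split rows into train/test, keeping each group's share in both parts.

    Hypothetical helper for illustration; not part of any library.
    """
    rng = random.Random(seed)  # local RNG, so the result ignores global random state
    by_group = defaultdict(list)
    for row in rows:
        by_group[row[group_key]].append(row)

    train, test = [], []
    for group in sorted(by_group):  # sorted keys give a fixed iteration order
        members = by_group[group][:]
        rng.shuffle(members)
        n_test = round(len(members) * test_fraction)
        test.extend(members[:n_test])
        train.extend(members[n_test:])
    return train, test

# Toy records: "sex" stands in for a labeled sensitive attribute (25 F, 75 M).
rows = [{"id": i, "sex": "F" if i % 4 == 0 else "M"} for i in range(100)]

train_a, test_a = stratified_split(rows, group_key="sex")
train_b, test_b = stratified_split(rows, group_key="sex")

print(test_a == test_b)                        # same seed, same split
print(sum(r["sex"] == "F" for r in test_a))    # number of F records in the test set
```

Two people running this with the same seed get identical splits, which is the property that lets results from different papers be compared directly.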

Why It Matters:

For Researchers: No more wasting months on data preprocessing. Compare your fairness method against others reliably. Build on reproducible foundations. Publish results that others can actually verify and extend.

For Developers: Build fairer systems with confidence. Access battle-tested datasets that reflect real-world fairness challenges. Understand exactly how your algorithm performs across different demographic groups and contexts.

For the Fairness Community: Finally, we can have meaningful debates about which fairness approaches actually work. No more "my dataset says X, yours says Y" arguments. Standardized evaluation = real progress.

For Society: This isn't just academic housekeeping. Biased AI systems are deployed in hiring, lending, healthcare, and criminal justice right now. FairGround enables researchers to develop fairness techniques that are tested across diverse contexts, not just cherry-picked datasets.

For Reproducible Science: The broader AI field faces a reproducibility crisis. FairGround sets the standard for how to do this right: open data, standardized tooling, transparent documentation, and community collaboration.
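For developers wondering what "performance across different demographic groups" looks like in practice, here is a small sketch. It is my own illustration, not code from the paper or the FairGround package: the function names and toy data are hypothetical. It computes per-group selection rate and accuracy, plus the demographic parity difference (the gap between the highest and lowest group selection rates), which is one common fairness metric among many.

```python
from collections import defaultdict

def group_report(y_true, y_pred, groups):
    """Per-group selection rate and accuracy for binary predictions (illustrative helper)."""
    stats = defaultdict(lambda: {"n": 0, "positive": 0, "correct": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        s = stats[group]
        s["n"] += 1
        s["positive"] += int(pred == 1)   # predicted the favorable outcome
        s["correct"] += int(truth == pred)
    return {
        group: {
            "selection_rate": s["positive"] / s["n"],
            "accuracy": s["correct"] / s["n"],
        }
        for group, s in stats.items()
    }

def demographic_parity_difference(report):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = [metrics["selection_rate"] for metrics in report.values()]
    return max(rates) - min(rates)

# Toy data: eight loan decisions across two hypothetical groups, A and B.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

report = group_report(y_true, y_pred, groups)
gap = demographic_parity_difference(report)
print(report)
print(gap)
```

In this toy example group A is approved 75% of the time and group B 25% of the time, so the gap is 0.5. Running the same report on many datasets, rather than one, is what tells you whether a mitigation method generalizes.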

Paper: Read More | Code: GitHub Repository