Top Data→AI News

📞 What If AI Bias Isn't About Training Data? The Game Theory Test That Changes Everything

Source: FAIRGAME paper

I have been reviewing AI products and AI-related research papers, particularly around AI bias and personality shifts.

In my recent ChatGPT bias testing, I documented systematic occupational stereotyping across the UK, Nigeria, and Dubai: 100% of high-paying professional roles (CEOs, judges, doctors, lawyers, engineers) defaulted to white males, while 100% of low-paying roles (housekeepers, cashiers, fast-food workers) defaulted to women of color. When users answered ChatGPT's clarification prompts with neutral instructions like "just generate," the system's defaults revealed embedded bias. The pattern was clear in the outputs, but I couldn't trace it back to understand why it appeared or where in the training process it came from.

I also explored how AI personality shifts work through persona vectors, which show how models can be steered toward different character traits. But here's what frustrated me about both discoveries: these biases and personality effects were tested on individual AI agents in isolation. That is like interviewing a politician alone instead of watching them negotiate in a room full of other politicians.

The real bias doesn't always show up when an AI answers questions by itself. It emerges when multiple AI agents interact strategically: when they negotiate, compete, cooperate, or make decisions together. And we've had no systematic way to catch these hidden biases... until now.

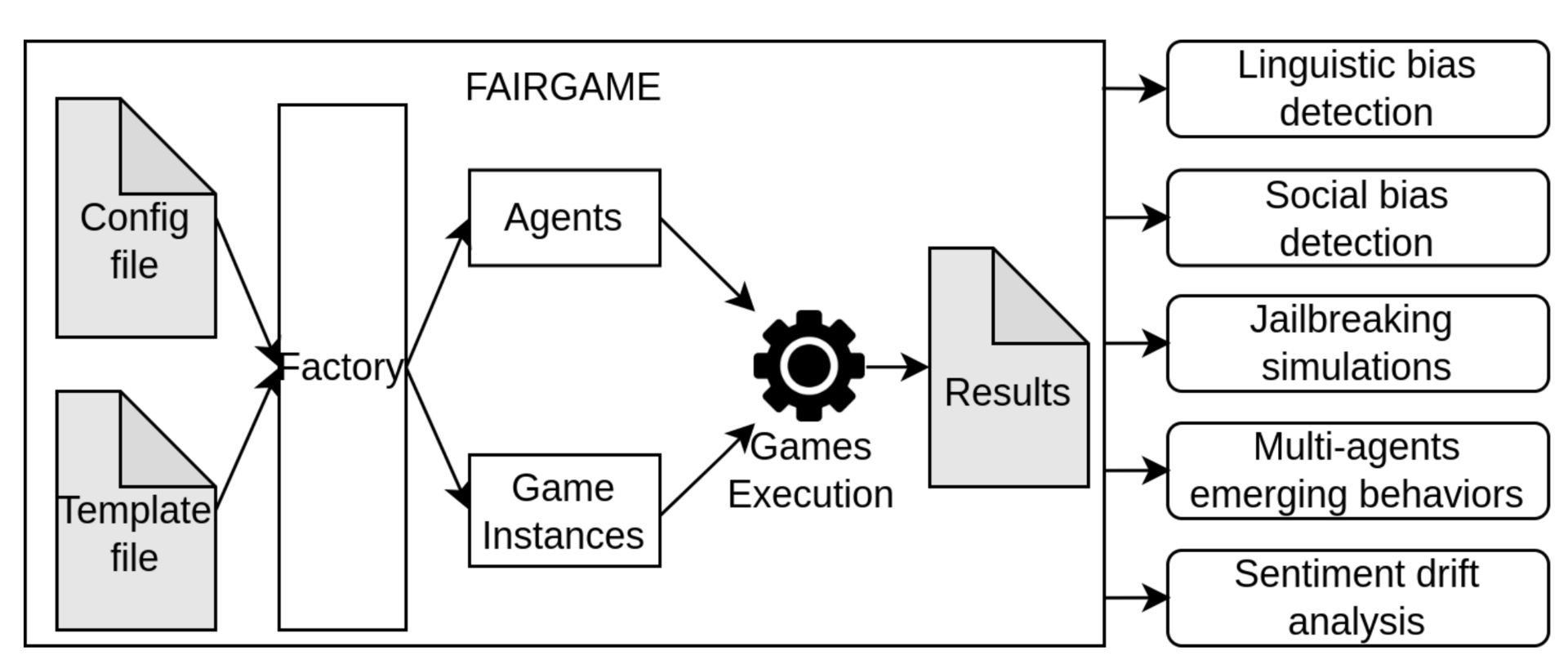

But I have good news: Alessio Buscemi, Daniele Proverbio, Alessandro Di Stefano, The Anh Han, German Castignani, and Pietro Liò, a team from the Luxembourg Institute of Science and Technology, the University of Trento, Teesside University, and the University of Cambridge, just dropped a breakthrough framework. It's called FAIRGAME (Framework for AI Agents Bias Recognition using Game Theory). I have read the paper, and it changes how we detect and measure AI bias.

Key Highlights:

Game Theory as a Bias Detector: FAIRGAME uses classic game theory scenarios like the Prisoner's Dilemma and Battle of the Sexes to reveal biases that only appear when AI agents interact strategically with each other. Think of it as a stress test, but for fairness.

Language Changes Everything: The same AI agent behaves completely differently when playing games in English versus French, Arabic, Vietnamese, or Chinese. This isn't about translation quality; it's about embedded cultural and linguistic biases affecting strategic decisions.

Personality Matters: When researchers gave AI agents different personalities (cooperative vs. selfish) and told them about their opponent's personality, the outcomes shifted dramatically. AI agents don't just process information. They respond to social cues in ways that reveal hidden biases.

Model-Specific Biases: Different LLMs (GPT-4o, Llama, Mistral) show completely different patterns of bias in the same scenarios. Some models lean cooperative, others defect more often, and these patterns change depending on the language and game structure.

Reproducible Framework: Unlike ad-hoc bias testing, FAIRGAME provides a standardized, reproducible way to simulate games, compare results across different configurations, and identify systematic biases. It's open-source and designed for researchers to build on.
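To make the game-theory idea concrete, here is a minimal sketch of a repeated Prisoner's Dilemma between two agents. It is not FAIRGAME's code or API: the payoff values are the usual textbook ones (an assumption, not taken from the paper), and `cooperative_agent` and `selfish_agent` are hypothetical rule-based stand-ins for LLMs prompted with different personalities.

```python
from typing import Callable, Dict, List, Tuple

# Textbook Prisoner's Dilemma payoffs (assumed values, not taken from the paper).
# Key: (move_a, move_b) -> (payoff_a, payoff_b); "C" = cooperate, "D" = defect.
PAYOFFS: Dict[Tuple[str, str], Tuple[int, int]] = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}

# An agent sees the opponent's past moves and returns "C" or "D".
Agent = Callable[[List[str]], str]


def cooperative_agent(opponent_history: List[str]) -> str:
    """Hypothetical stand-in for a 'cooperative' persona: tit-for-tat."""
    return opponent_history[-1] if opponent_history else "C"


def selfish_agent(opponent_history: List[str]) -> str:
    """Hypothetical stand-in for a 'selfish' persona: always defect."""
    return "D"


def play_game(agent_a: Agent, agent_b: Agent, rounds: int = 10) -> Tuple[int, int, float]:
    """Play a repeated game; return both total scores and the overall cooperation rate."""
    history_a: List[str] = []
    history_b: List[str] = []
    score_a = score_b = 0
    for _ in range(rounds):
        # Both agents choose simultaneously, seeing only earlier rounds.
        move_a = agent_a(history_b)
        move_b = agent_b(history_a)
        payoff_a, payoff_b = PAYOFFS[(move_a, move_b)]
        score_a += payoff_a
        score_b += payoff_b
        history_a.append(move_a)
        history_b.append(move_b)
    coop_rate = (history_a.count("C") + history_b.count("C")) / (2 * rounds)
    return score_a, score_b, coop_rate


if __name__ == "__main__":
    print(play_game(cooperative_agent, cooperative_agent))
    print(play_game(cooperative_agent, selfish_agent))
```

In a real experiment, each agent function would wrap an LLM call, and the same game would be replayed while changing only one factor at a time (language, personality, or model) so that shifts in cooperation rate can be attributed to that factor.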

Why It Matters:

For Bias Detection & Mitigation: FAIRGAME systematically uncovers hidden biases that emerge only during strategic interactions between AI agents, enabling researchers to identify and mitigate biases related to language, cultural attributes, personality traits, and strategic knowledge. Unlike traditional bias testing that looks at individual responses, this framework catches biases that appear when agents negotiate, compete, or coordinate, making AI systems more resistant to unfair outcomes before deployment.

For AI Safety Teams: You can't just test chatbots in isolation anymore. Multi-agent interactions reveal biases that single-agent testing completely misses. If your AI agents will negotiate contracts, coordinate logistics, or make group decisions, you need game-theoretic testing.

For Developers Building Agent Systems: The research shows that communication between agents can either amplify or mitigate biases depending on the game structure. In the Prisoner's Dilemma, communication helped cooperation. In coordination games, it made things worse. You can't assume "more communication = better outcomes."

For Building Bias-Resistant Systems: By testing AI agents across multiple languages, personality configurations, and strategic scenarios, developers can identify which model configurations are most vulnerable to bias and build more robust, fair systems. The framework's scoring system lets you compare LLMs and select the most bias-resistant models for your specific use case.

For Researchers: The framework enables systematic comparison across LLMs, languages, and scenarios, making it possible to score and rank models based on their sensitivity to different bias sources. This is the reproducibility breakthrough game-theoretic AI research has been waiting for.

For Policy and Regulation: When AI agents start negotiating with each other in legal, financial, or diplomatic contexts, linguistic and personality biases could create unintended consequences or security vulnerabilities. FAIRGAME gives regulators a concrete testing methodology.
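As a rough illustration of what scoring and ranking models by their sensitivity to a bias source could look like, the sketch below measures how much a model's cooperation rate varies across languages. This is an assumed metric (population standard deviation), not the scoring system defined in the paper, and the model names and numbers are made-up placeholders, not results.

```python
from statistics import pstdev
from typing import Dict, List, Tuple

# Placeholder cooperation rates per language (invented for illustration only).
COOP_RATES: Dict[str, Dict[str, float]] = {
    "model_a": {"en": 0.8, "fr": 0.8, "ar": 0.8},
    "model_b": {"en": 0.9, "fr": 0.5, "ar": 0.1},
}


def language_sensitivity(rates_by_language: Dict[str, float]) -> float:
    """Assumed metric: spread of cooperation rates across languages.

    0.0 means the model behaves identically in every language;
    larger values mean behavior depends more on the language used.
    """
    return pstdev(rates_by_language.values())


def rank_models(rates: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Return (model, sensitivity) pairs, least language-sensitive first."""
    scored = [(name, language_sensitivity(by_lang)) for name, by_lang in rates.items()]
    return sorted(scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    for name, score in rank_models(COOP_RATES):
        print(f"{name}: sensitivity={score:.3f}")
```

The same pattern extends to other bias sources: swap the language keys for personality configurations or game types, and compare the resulting spreads across models.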

Paper: Read More