Top Data→AI News

📞 What If We Could Run AI Models Backward? Meet SipIt, the Algorithm That Traces Bias to Its Source

In my recent ChatGPT bias testing, I documented systematic occupational stereotyping: CEOs were consistently portrayed as white, while cashiers showed racial diversity. But here's what frustrated me: I could see the bias in the outputs, but I couldn't trace it back to understand why it arose or where in the training process it came from.

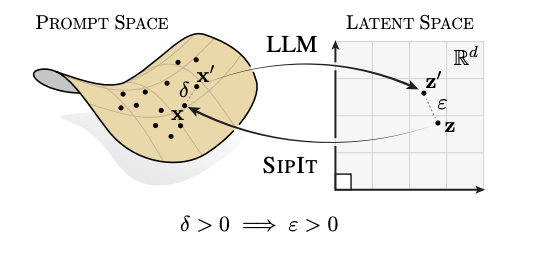

This frustration is shared across the research community. For years, researchers have been bothered by the "black box" nature of neural networks, in which information seems to get lost and scrambled along the way. You can't reverse them. You can't trace back from the output to see exactly what input created it. The math even seems to confirm this: non-linear activations and normalization layers are "non-injective," meaning different inputs can produce the same output.

This creates two major problems:

Security nightmare: If you can't reverse an LLM, you can't prove what training data it memorized or whether it's leaking sensitive information.

Interpretability dead-end: If information is lost in the layers, how can we ever truly understand what the model learned?
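To make the non-injectivity worry concrete, here is a minimal Python sketch showing that ReLU and LayerNorm can each map two different inputs to the same output. The toy vectors and helper names (`relu`, `layer_norm`) are my own illustration, not taken from any paper, and the LayerNorm here omits the learned scale and shift for simplicity.

```python
import math

def relu(xs):
    # ReLU clamps every negative value to zero, so negatives are indistinguishable afterwards.
    return [max(0.0, x) for x in xs]

def layer_norm(xs, eps=1e-5):
    # Simplified LayerNorm (no learned scale/shift): subtract the mean, divide by the std.
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    return [(x - mean) / math.sqrt(var + eps) for x in xs]

# Two different inputs, same ReLU output: the negative entry is erased.
a = [-1.0, 2.0]
b = [-5.0, 2.0]
relu_collision = relu(a) == relu(b)

# Two different inputs, same LayerNorm output: a constant shift is erased.
u = [1.0, 2.0, 3.0]
v = [11.0, 12.0, 13.0]
ln_collision = all(
    math.isclose(p, q, abs_tol=1e-9)
    for p, q in zip(layer_norm(u), layer_norm(v))
)

print("ReLU collision:", relu_collision)
print("LayerNorm collision:", ln_collision)
```

Taken in isolation, each of these layers throws information away, which is why the "you can't reverse it" intuition seemed so solid.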

But I have good news: new research just dropped, and it completely flips this assumption. It's called "Language Models are Injective and Hence Invertible," and I've read it. The findings are mind-blowing. Researchers Giorgos Nikolaou, Tommaso Mencattini, Donato Crisostomi, Andrea Santilli, Yannis Panagakis, and Emanuele Rodolà, a team from University of Rome, EPFL, University of Athens, and Archimedes RC, introduce SipIt, the first algorithm that reconstructs the exact original text from hidden activations.

Key Highlights:

LLMs Are Actually Lossless: Despite having non-injective components like ReLU and LayerNorm, the paper proves mathematically that transformer language models are injective as a whole, meaning every distinct input maps to a distinct internal representation. No information is lost.

Billions of Tests, Zero Collisions: They ran collision tests on six state-of-the-art models (including Llama 3.1, Mistral, and Gemma) across billions of attempts. The result? Not a single collision. The math holds up in practice.

Perfect Reconstruction Possible: They created SipIt, the first algorithm that can invert any LLM's hidden activations back to the exact original input text, with guarantees and in linear time. You can literally run the model backward now.
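Here is a toy Python sketch of the two ideas in these highlights: a brute-force collision check and a token-by-token inversion. Everything in it is an assumption for illustration. The tiny recurrent "model", the names `step`, `hidden_states`, and `invert`, and the sizes `VOCAB_SIZE` and `DIM` are hypothetical, and this is not the paper's SipIt implementation. It only mirrors the causal intuition: if the hidden state at each position depends on the tokens seen so far, you can recover one token at a time by testing vocabulary entries against the observed state.

```python
import itertools
import math
import random

VOCAB_SIZE = 20  # hypothetical toy vocabulary
DIM = 8          # hypothetical hidden size

rng = random.Random(0)  # fixed seed so the toy weights are reproducible
EMBED = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(VOCAB_SIZE)]
MIX = [[rng.gauss(0, 0.5) for _ in range(DIM)] for _ in range(DIM)]

def step(prev_state, token):
    # One causal update: the new state depends only on the previous state and the current token.
    return [
        math.tanh(EMBED[token][i] + sum(MIX[i][j] * prev_state[j] for j in range(DIM)))
        for i in range(DIM)
    ]

def hidden_states(tokens):
    # Run the toy model forward and keep the state at every position.
    state, states = [0.0] * DIM, []
    for tok in tokens:
        state = step(state, tok)
        states.append(state)
    return states

def distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def invert(states):
    # Recover tokens left to right: at each position, pick the vocabulary entry
    # whose predicted state is closest to the observed one.
    prev, recovered = [0.0] * DIM, []
    for observed in states:
        best = min(range(VOCAB_SIZE), key=lambda t: distance(step(prev, t), observed))
        recovered.append(best)
        prev = observed
    return recovered

# Collision check: compare the final state of every distinct two-token prompt.
finals = [hidden_states(list(p))[-1] for p in itertools.product(range(VOCAB_SIZE), repeat=2)]
min_gap = min(distance(a, b) for a, b in itertools.combinations(finals, 2))

# Inversion check: run the toy model forward, then recover the prompt from its states.
prompt = [3, 17, 3, 0, 9]
recovered = invert(hidden_states(prompt))

print("smallest gap between distinct prompts:", min_gap)
print("recovered prompt matches:", recovered == prompt)
```

The inversion loop does at most `VOCAB_SIZE` checks per position, so its cost grows linearly with prompt length, which is the flavor of guarantee described above. A real transformer is vastly larger, so treat this as intuition only.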

Why It Matters:

The Privacy Implications Are Huge

If LLMs are invertible, we can now definitively answer: "Did this model memorize my private data?" Run the inversion algorithm, and you'll know exactly what training examples the model can reconstruct. No more guessing about privacy leaks: we can prove it mathematically.

Bias Detection and Fairness in Algorithms

This changes everything for AI bias research. If we can reverse engineer exactly which training examples influenced specific outputs, we can trace biased decisions back to their source. When a model makes a discriminatory prediction, we can now see exactly what training data led to that behavior. No more treating bias as an invisible property: we can pinpoint the problematic patterns in the training set, understand how they propagate through layers, and potentially remove them. For fairness auditing, this means we can prove whether a model learned from biased data rather than just observing biased outputs.

Interpretability Just Got Real

For years, we've said "we can't understand neural networks because information gets lost in the layers." This research shows that's wrong. Every intermediate representation preserves complete information about the input. This means we can trace any output back through the layers to see exactly how the model processed it, with no information loss to hide behind.

Foundation Model Security

Adversaries could potentially use SipIt to extract training data from deployed models. But defenders can use the same tool to audit their models before deployment, identify memorized sensitive data, and remove it. The era of "security through obscurity" in LLMs is over. Now we need real defenses.

Rethinking Model Architecture

If transformers are inherently injective despite non-injective components, what does this tell us about how to design better models? Maybe we've been worried about the wrong things. The research opens up new questions about what architectural properties actually matter for performance versus which are just historical accidents.

Paper: Read More