Top Data→AI News

📞 The Tiny AI Model That Just Killed "Bigger Is Better": Hierarchical Reasoning Model (HRM)

Source: arxiv.org

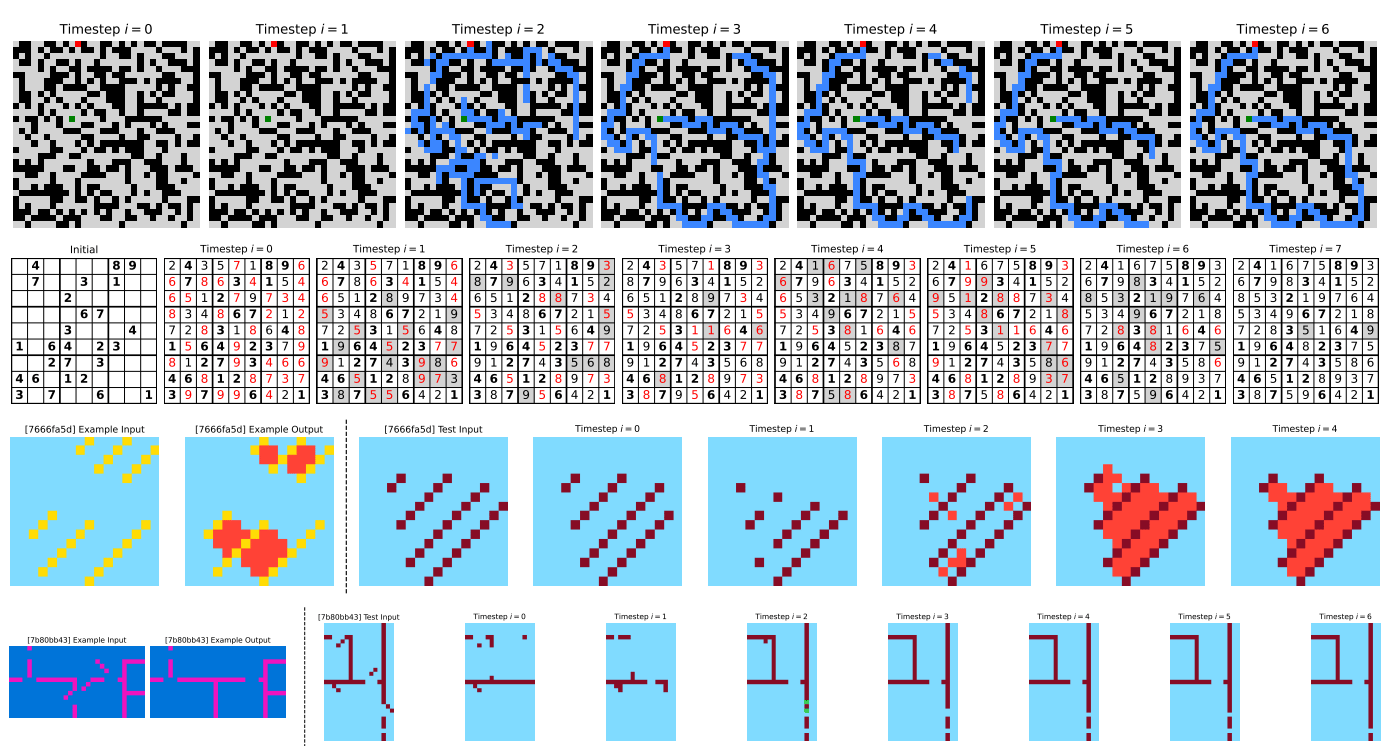

The AI scaling race just hit a wall. While tech giants throw billions of parameters and massive datasets at reasoning problems, the Hierarchical Reasoning Model (HRM), with just 27 million parameters, achieves what they can't: deep, multi-step reasoning. Using a brain-inspired architecture and only 1,000 training examples per task, it solves complex puzzles that completely stump models 1,000x its size.

Technology Used

Core Innovation:

Hierarchical Architecture: Two interdependent recurrent modules that mimic the brain's multi-timescale processing, a slow "high-level" module for abstract planning and a fast "low-level" module for detailed computation.

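Hierarchical Convergence: Avoids the premature convergence of standard recurrent networks. The low-level module converges to a local equilibrium within each cycle; the high-level module then updates, which effectively resets the low-level module with a new context, so the model keeps doing useful computation over many timesteps.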

One-Step Gradient Approximation: Memory-efficient training that needs O(1) memory instead of the O(T) required by backpropagation through time (BPTT) over T timesteps, making it scalable and more biologically plausible.
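To make the nested update schedule concrete, here is a minimal NumPy sketch, not the paper's implementation. It assumes single tanh layers for both modules (the paper's modules are Transformer blocks), made-up sizes (`D`, `N`, `T`), and hypothetical names (`scaled_matrix`, `f_L`, `f_H`, `hrm_forward`). The low-level recurrent weights are scaled so each low-level step is a contraction, which makes the "converge within a cycle, then get a fresh context" behavior visible in the printed step sizes.

```python
import numpy as np

# Hypothetical toy setup: sizes and weights are made up for illustration.
rng = np.random.default_rng(0)
D = 16        # hidden size (assumption)
N, T = 4, 8   # N slow high-level cycles, each with T fast low-level steps (assumption)

def scaled_matrix(norm):
    """Random D x D matrix rescaled so its spectral norm equals `norm`."""
    w = rng.standard_normal((D, D))
    return norm * w / np.linalg.norm(w, 2)

W_L, U_L, P_x = scaled_matrix(0.5), scaled_matrix(1.0), scaled_matrix(1.0)
W_H, U_H = scaled_matrix(0.5), scaled_matrix(1.0)

def f_L(z_L, z_H, x):
    # Fast low-level module: sees its own state, the current plan z_H, and the input.
    return np.tanh(W_L @ z_L + U_L @ z_H + P_x @ x)

def f_H(z_H, z_L):
    # Slow high-level module: updated once per cycle from the settled z_L.
    return np.tanh(W_H @ z_H + U_H @ z_L)

def hrm_forward(x, z_L, z_H):
    """Run N*T low-level and N high-level updates.

    Returns the final states and, per cycle, how far z_L moved at each step.
    """
    step_sizes = []
    for _ in range(N):
        cycle = []
        for _ in range(T):
            new_z_L = f_L(z_L, z_H, x)
            cycle.append(float(np.linalg.norm(new_z_L - z_L)))
            z_L = new_z_L
        # With z_H fixed, f_L shrinks distances by a factor of at least 2
        # (||W_L|| = 0.5, tanh is 1-Lipschitz), so z_L settles toward a local equilibrium.
        z_H = f_H(z_H, z_L)  # new plan = new context for the next cycle's z_L
        step_sizes.append(cycle)
    # One-step gradient (as we read the paper): only the final f_L and f_H calls
    # would be kept for backprop; earlier steps store no activations, so memory
    # does not grow with N*T. NumPy has no autograd, so this is noted only.
    return z_L, z_H, step_sizes

x = rng.standard_normal(D)
z_L, z_H, step_sizes = hrm_forward(x, np.zeros(D), np.zeros(D))
for n, cycle in enumerate(step_sizes):
    print(f"cycle {n}: first step {cycle[0]:.4f}, last step {cycle[-1]:.6f}")
```

In each cycle the last low-level step is far smaller than the first, and once z_H changes the next cycle's first step typically jumps back up: the low-level module settles, then gets a fresh problem to settle on.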

Key Capabilities:

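Deep Supervision: The model runs several forward passes (segments); each segment gets its own loss and parameter update, and the hidden state carried into the next segment is detached from the gradient graph, which improves training stability.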

Adaptive Computation Time: Allocates "thinking time" dynamically based on problem complexity, using a Q-learning head that decides after each segment whether to halt or continue.

Inference-Time Scaling: Performance improves when more computation (a higher cap on the number of segments) is allowed at inference, without retraining.
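The training loop behind deep supervision and adaptive halting can be sketched in plain Python. This is a simplified reading of the paper's description, not its code: `run_segment`, `should_halt`, `q_targets`, `deep_supervision`, and the toy segment functions are hypothetical, the exact form of the Q-learning targets is an assumption, and the loss and optimizer step are left as comments.

```python
import random

# Hypothetical sketch: names, the Q-target form, and the toy values are assumptions.

def should_halt(q_halt, q_continue, m, m_min, m_max):
    """Stop at the segment cap, or after m_min segments once Q(halt) > Q(continue)."""
    if m >= m_max:
        return True
    return m >= m_min and q_halt > q_continue

def q_targets(correct, next_q_halt, next_q_continue, m, m_max):
    """Assumed Q-learning targets for segment m.

    Halting is rewarded 1 if the current answer is right, else 0.
    Continuing bootstraps from the next segment's Q-values; if that next
    segment hits the cap it must halt, so only its halt value counts.
    """
    g_halt = 1.0 if correct else 0.0
    if m + 1 >= m_max:
        g_continue = next_q_halt
    else:
        g_continue = max(next_q_halt, next_q_continue)
    return g_halt, g_continue

def deep_supervision(run_segment, state, m_max, epsilon=0.1, rng=random):
    """Run segments until the halting rule fires; return (state, segments used).

    In real training each segment would compute a loss, take an optimizer
    step, and detach `state` so no gradient crosses segment boundaries.
    """
    # Exploration: with probability epsilon, require a random minimum
    # number of segments before halting is allowed.
    m_min = rng.randint(2, m_max) if m_max >= 2 and rng.random() < epsilon else 1
    m = 0
    while True:
        m += 1
        state, q_halt, q_continue = run_segment(state)
        # (loss, backward pass, optimizer step, and Q-head update go here)
        if should_halt(q_halt, q_continue, m, m_min, m_max):
            return state, m

def toy_segment(state):
    # Toy stand-in for one HRM forward pass: the halt value grows with each
    # refinement while the continue value stays fixed.
    state += 1
    return state, 0.2 * state, 0.5

def never_sure(state):
    # Toy model that never prefers halting, so only the cap stops it.
    return state + 1, 0.0, 1.0

print(deep_supervision(toy_segment, 0, m_max=8, epsilon=0.0))  # (3, 3)
for cap in (4, 16):  # a larger cap at inference means more segments of "thinking"
    print(cap, deep_supervision(never_sure, 0, m_max=cap, epsilon=0.0)[1])
```

Raising `m_max` at inference is the knob behind inference-time scaling in this sketch: a problem the model is not yet confident about simply gets more segments.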

Validation Results:

ARC-AGI-1: 40.3% accuracy vs. 21.2% for Claude 3.7 and 34.5% for o3-mini-high

Sudoku-Extreme: 55% accuracy, where the compared baseline models scored 0%

30×30 Maze Navigation: 74.5% accuracy, where the compared baseline models failed completely

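Neurobiological Validation: Reproduces the hierarchy of representational dimensionality observed in mouse cortex, with the high-level module's states spanning more dimensions than the low-level module's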
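The dimensionality comparison can be illustrated with the participation ratio, a standard measure of how many directions a set of hidden-state vectors effectively spans. The NumPy sketch below computes it; `participation_ratio` is a hypothetical helper, and applying it to HRM states (for example, collecting high-level and low-level states across many puzzles and comparing the two values) is an assumption about how such an analysis could be run, not the paper's code.

```python
import numpy as np

def participation_ratio(states):
    """Effective dimensionality of hidden states, shape (num_samples, hidden_dim).

    PR = (sum of covariance eigenvalues)^2 / (sum of squared eigenvalues).
    It is 1 when all variance lies along a single direction and equals
    hidden_dim when variance is spread evenly across all directions.
    """
    cov = np.cov(states, rowvar=False)
    eig = np.clip(np.linalg.eigvalsh(cov), 0.0, None)  # clip tiny negative round-off
    return float(eig.sum() ** 2 / np.sum(eig ** 2))

# Sanity checks on synthetic data (not HRM states):
isotropic = np.vstack([np.eye(3), -np.eye(3)])       # equal variance in 3 directions
one_dim = np.outer(np.arange(5.0), [1.0, 2.0, 3.0])  # all variance along one line
print(participation_ratio(isotropic))  # ≈ 3
print(participation_ratio(one_dim))    # ≈ 1
```

In this framing, a higher value for the high-level states than for the low-level states is the pattern the result above describes.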

Why it matters:

The reasoning ceiling that has been limiting AI has been shattered. While billion-parameter models burn through massive datasets and computational resources only to fail at hard logic puzzles, HRM shows that, on these benchmarks, architecture can matter more than scale. With 1,000x fewer parameters and a fraction of the training data, it solves problems that stump the giants. This isn't just an incremental improvement: it's a fundamental breakthrough that rewrites the rules of AI reasoning and points toward systems that think like brains, not search engines.

Paper: Hierarchical Reasoning Model | Training Data: Only 1000 examples per task, no pre-training required

NEWLY LAUNCHED AI TOOLS

Trending AI tools

💬 Scribe - ElevenLabs' new SOTA speech-to-text model

🪨 Granite 3.2 - IBM's compact open models for enterprise use

🗣️ Octave TTS - Generate AI voices with emotional delivery

🧑‍🔬 Deep Review - AI co-scientist for literature reviews

Source: RundownAI