I have been reviewing AI products and AI-related research papers, but something's been bothering me.

We are building AI models that can do almost everything, from writing code and prose to generating video and art, on top of Python, a language that was not designed specifically for AI. To make up for that, we attach huge libraries like TensorFlow and PyTorch to make it work.

Meanwhile, there are LISP and Prolog. They seem old, but they were built for pure logic and reasoning. Their disadvantage is that they can't handle the scale and learning capabilities that modern deep learning requires.

So we have two AI worlds:

Neural AI: Scalable, learns from data, but it's a black box that makes stuff up (aka hallucinates).

Symbolic AI: Logical, transparent, reliable, but brittle and doesn't scale.

We've been trying to duct-tape these two together for years with something called "neurosymbolic AI," but it often gives us the worst of both worlds.

I have good news: a new paper by Pedro Domingos of the University of Washington just dropped, and it proposes an answer to this problem. It's called Tensor Logic: The Language of AI. I've read it, and I think the approach is excellent.

Key Highlights:

Single Building Block for All AI: Instead of PyTorch for neural networks, Prolog for logic, and other frameworks for probabilistic models, Tensor Logic uses one construct—the tensor equation—for everything. Write transformers, logical rules, and statistical models all in the same unified language.

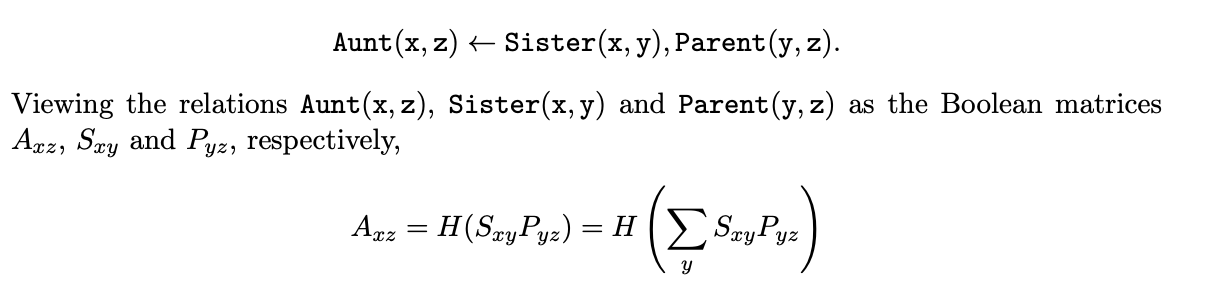

Neural Networks and Logic Are Mathematical Twins: The paper shows that logical rules (like in databases and expert systems) and tensor operations (like in deep learning) are fundamentally the same operation. A database join followed by a projection is the same computation as an Einstein summation over tensors; the two just use different data types (Boolean values vs. real numbers).

Transformers Become 12 Equations: The complex transformer architecture behind ChatGPT that normally requires hundreds of lines of PyTorch code? It becomes just 12 simple tensor equations. Clearer to understand, easier to modify, more transparent to debug.

Reasoning Without Hallucinations: Tensor Logic can perform logical reasoning directly in embedding space. At low temperature settings, you get mathematically guaranteed correct deductions, with no hallucinations. Increase the temperature, and the system can generalize across similar concepts while maintaining logical grounding.
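
To make the temperature idea a bit more concrete, here is a toy NumPy sketch. It is my own illustration, not the paper's construction: the objects, the hand-built (not learned) embeddings, the `truth` helper, and the `threshold=0.9` cutoff are all assumptions chosen so the effect is easy to see. A relation is stored as a sum of outer products of embeddings, and a temperature-scaled sigmoid turns the retrieval score into a truth value.

```python
import numpy as np

def sigmoid(x, temperature):
    # Temperature-scaled logistic; approaches a hard step function as temperature -> 0.
    return 1.0 / (1.0 + np.exp(-x / temperature))

# Hypothetical objects with hand-built unit embeddings (assumption: not learned).
basis = np.eye(4)
emb = {
    "cat": basis[0],
    "milk": basis[1],
    "rock": basis[2],
    # "kitten" is deliberately similar to "cat" (cosine similarity 0.8).
    "kitten": 0.8 * basis[0] + 0.6 * basis[3],
}

# Embed the relation Likes as a sum of outer products of its facts' embeddings.
facts = [("cat", "milk")]
likes = sum(np.outer(emb[x], emb[y]) for x, y in facts)

def truth(x, y, temperature, threshold=0.9):
    # Retrieval score: 1 for a stored fact, smaller for merely similar objects.
    score = emb[x] @ likes @ emb[y]
    return float(sigmoid(score - threshold, temperature))

for t in (0.01, 0.5):
    print(t, [round(truth(x, "milk", t), 3) for x in ("cat", "kitten", "rock")])
```

In this sketch, the low temperature accepts only the stored fact (cat, milk), while the higher temperature gives the similar "kitten" noticeably more credit than the unrelated "rock". Whether a real system behaves this cleanly depends on the learned embeddings, which this toy version skips entirely.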

Why It Matters:

The Fragmentation Problem Is Expensive

Every AI developer knows the pain: you learn PyTorch for neural networks, then need to bolt on separate tools for reasoning, then add another framework for probabilistic inference. Each integration is a custom hack. Maintenance becomes a nightmare. Teams spend months just getting incompatible systems to communicate.

Tensor Logic proposes we've been fighting the wrong battle. The fragmentation isn't necessary: neural and symbolic AI are different perspectives on the same mathematical foundations.
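
Here is a small sketch of what "the same mathematical foundations" can look like in practice, using plain NumPy `einsum` rather than Tensor Logic's own syntax. The people and the `parent` relation are made up for illustration. The logical rule Grandparent(x, z) <- Parent(x, y), Parent(y, z) is written as a join on the shared index `y`, a sum over it, and a threshold back to 0/1.

```python
import numpy as np

# Hypothetical toy domain: four people indexed 0..3.
people = ["alice", "bob", "carol", "dave"]

# parent[x, y] == 1 means "x is a parent of y" (a Boolean relation stored as a 0/1 matrix).
parent = np.zeros((4, 4), dtype=np.int64)
parent[0, 1] = 1  # alice -> bob
parent[1, 2] = 1  # bob -> carol
parent[2, 3] = 1  # carol -> dave

# Rule: Grandparent(x, z) <- Parent(x, y), Parent(y, z)
# Join on the shared index y, project it out by summing, then threshold back to 0/1.
counts = np.einsum("xy,yz->xz", parent, parent)
grandparent = (counts > 0).astype(np.int64)

grandparent_pairs = [(people[x], people[z]) for x, z in zip(*np.nonzero(grandparent))]
print(grandparent_pairs)
```

The same `einsum` call on real-valued matrices is an ordinary matrix product; only the data type and the final threshold differ.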

Practical Impact for Developers

The learning curve collapses. One language replaces the entire fragmented ecosystem. Code becomes dramatically simpler: what takes hundreds of lines in current frameworks becomes a dozen equations. More importantly, the code maps directly to the underlying mathematics, making it easier to reason about, debug, and extend.
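
To give a feel for "code that maps directly to the math," here is a minimal sketch of single-head self-attention written as a handful of `einsum` equations in NumPy. This is not the paper's notation and not its full set of transformer equations; the function names, shapes, and random weights are assumptions for illustration only.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(X, WQ, WK, WV):
    # Index names: p, q = positions; d = model dim; k = key dim; v = value dim.
    Q = np.einsum("pd,dk->pk", X, WQ)   # queries
    K = np.einsum("pd,dk->pk", X, WK)   # keys
    V = np.einsum("pd,dv->pv", X, WV)   # values
    scores = np.einsum("pk,qk->pq", Q, K) / np.sqrt(Q.shape[-1])
    A = softmax(scores, axis=-1)        # each row sums to 1
    return np.einsum("pq,qv->pv", A, V)

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 8))             # 5 tokens, model dimension 8
WQ, WK, WV = (rng.normal(size=(8, 4)) for _ in range(3))
out = attention(X, WQ, WK, WV)
print(out.shape)
```

Each line is one equation with its indices spelled out, which is roughly the style the paper argues for.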

Solving the Hallucination Crisis

Language models make things up because they're pure pattern matchers without logical constraints. They generate plausible-sounding nonsense. Tensor Logic enables reasoning in embedding space with controllable soundness. Set temperature to zero: pure deduction, mathematically guaranteed correctness. Adjust upward: controlled analogical reasoning that generalizes while staying grounded in logic.

This is exponentially more powerful than retrieval-augmented generation. Instead of just retrieving facts from a database, the system retrieves the logical consequences of those facts under specified rules.
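
The difference between retrieving facts and retrieving their logical consequences can be sketched with a tiny forward-chaining loop. This is a plain Boolean NumPy illustration of the idea, not the embedding-space version described in the paper; the `deductive_closure` helper and the four-node graph are hypothetical.

```python
import numpy as np

def deductive_closure(edge):
    """Forward-chain two rules to a fixpoint:
    Path(x, z) <- Edge(x, z)
    Path(x, z) <- Path(x, y), Edge(y, z)
    """
    path = edge.copy()
    while True:
        # Join Path and Edge on y, sum y out, and threshold back to Boolean.
        step = np.einsum("xy,yz->xz", path.astype(np.int64), edge.astype(np.int64)) > 0
        new_path = path | step
        if np.array_equal(new_path, path):
            return path
        path = new_path

# Stored facts: a chain 0 -> 1 -> 2 -> 3.
edge = np.zeros((4, 4), dtype=bool)
edge[0, 1] = edge[1, 2] = edge[2, 3] = True

path = deductive_closure(edge)
print(int(edge.sum()), "stored facts;", int(path.sum()), "facts after closure")
print("Path(0, 3) derivable:", bool(path[0, 3]))
```

A lookup over the stored facts alone would never return Path(0, 3); it only appears once the rules have been applied repeatedly.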

Transparency We Actually Need

Current neural networks are inscrutable black boxes. You can't see why they made a decision. Symbolic systems are transparent but can't learn from data. Tensor Logic unifies them: you get learned, adaptive systems where you can extract and examine the reasoning process at any point. See exactly which rules fired, which inferences were drawn, and why the system reached each conclusion.

For high-stakes applications (medical diagnosis, legal reasoning, financial decisions), this transparency isn't optional; it's mandatory.

Beyond AI: Scientific Computing Gets Simpler

Scientists translate mathematical equations into code constantly. The current process: write equations on paper, then translate them to NumPy, MATLAB, or similar, with significant cognitive overhead. Tensor Logic offers near one-to-one correspondence between mathematical notation and executable code. Physics simulations, climate models, and computational chemistry all become more direct to express and easier to verify for correctness.
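
As a rough illustration of that correspondence, take the quadratic energy E = ½ Σᵢⱼ xᵢ Kᵢⱼ xⱼ. With today's NumPy `einsum` (which the paper treats as a starting point, not as Tensor Logic itself), the code already mirrors the index notation closely. The matrix `K` and vector `x` below are made-up values.

```python
import numpy as np

# Hypothetical stiffness matrix and displacement vector.
K = np.array([[2.0, -1.0],
              [-1.0, 2.0]])
x = np.array([1.0, 2.0])

# E = 1/2 * sum_ij x_i K_ij x_j, written index-for-index.
E = 0.5 * float(np.einsum("i,ij,j->", x, K, x))
print(E)
```

The subscript string `"i,ij,j->"` reads the same way as the summation on paper, which makes it easier to check the code against the equation.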

The Adoption Question

Fields take off when they find their language. Physics needed calculus. Electrical engineering needed complex numbers. The web needed HTML. Has AI found its language?

Python works but wasn't designed for AI. PyTorch and TensorFlow are powerful but address only part of the problem. Tensor Logic argues the answer is recognizing mathematical unity where we've been seeing fragmentation.

The path forward is backward-compatible: Tensor Logic can start as an improved einsum within Python, gradually absorb NumPy and PyTorch capabilities, and eventually supersede them as the benefits become undeniable.

Success depends on whether the AI community embraces mathematical unification over continued duct-taping. But after years of incompatible systems held together with hacks, perhaps it's time to build on solid foundations instead.

Paper: Read More | Learn More: tensor-logic.org